Computer Graphics

Research Scientist | Software Engineer

Craig Donner

Research Areas:

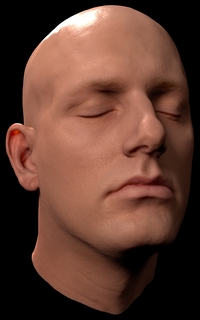

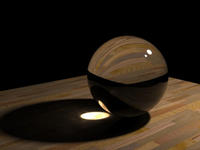

Appearance Modeling

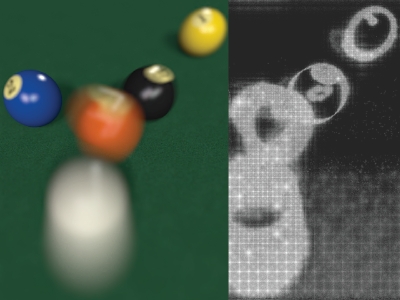

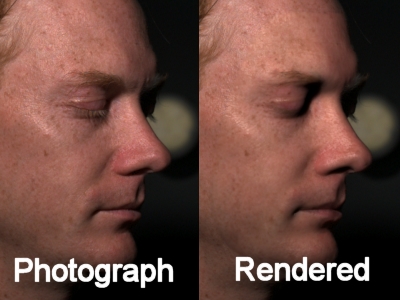

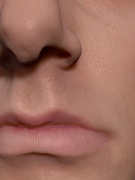

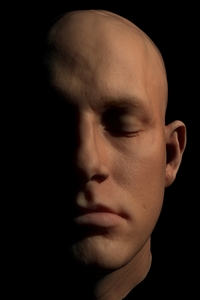

Describing the appearance of real physical materials with new models and algorithms.

Photorealistic Image Synthesis

Developing new techniques to generate images indistinguishable from what we see around us in the natural world.

Efficient Light Transport Models

Developing efficient, practical, and accurate models for light transport in complex materials.

Measurement Techniques

Designing methods for accurate measurement of real-world material characteristics for use in various scientific fields.

Bio:

I was always fascinated by the appearance of the world, and later researched and created tools for acquiring appearance characteristics as well as more general light transport and rendering methods. In 2012, I joined Google, and have worked on everything from Google Earth to Cardboard, Virtual Reality, and Daydream. Over the years I've built small systems and tools, rolled out Google-scale infrastructure, and enabled research published in journals such as Nature. I've led teams as small as a handful and as large as many dozens. At the moment, I direct the engineering of DeepMind's Science group in London, UK.

Before Google, I ran my own shop, Leolux, which created custom rendering software and tools for the realistic rendering of biological materials.

Before that, I was a Postdoctoral Researcher in the Computer Graphics group at Columbia University, with Professor Ravi Ramamoorthi, from Fall 2007 through Fall 2009.

Before joining the lab at Columbia, I was a postdoc in the Graphics group of the University of California, San Diego, where I also did my Ph.D. under Professor Henrik Wann Jensen.

Publications:

Science 389.6764, 1012-1015, 2025

arXiv 2406.06718, 2024

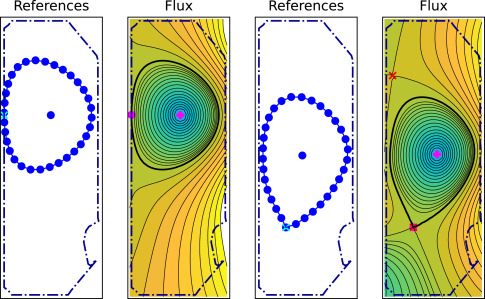

Fusion Engineering and Design 200:114-161, 2024

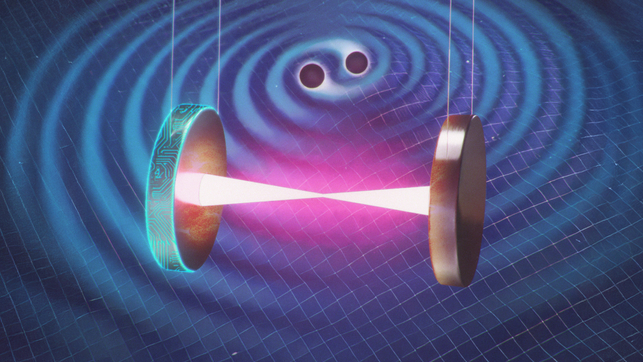

Nature 602:414-419, 2022

Nature Physics 16(4):448-454, 2020

ACM Trans. Graph. 29:6:1-141:1-10 (Proceedings of SIGGRAPH Asia 2010), 2010

ACM Trans. Graph. 28:5:1-140:1-12 (Proceedings of SIGGRAPH Asia 2009), 2009

ACM Trans. Graph. 28:3:1-30:1--10 (Proceedings of SIGGRAPH 2009), 2009

ACM Trans. Graph. 27:5:140:1-12 (Proceedings of SIGGRAPH Asia 2008), 2008

ACM Trans. Graphic., 27(1):1-11, 2008

In Rendering Techniques (Proceedings of the Eurographics Symposium on Rendering), pages 234--251, 2007

In ACM SIGGRAPH Sketches and Applications, 2007

In Rendering Techniques (Proceedings of the Eurographics Symposium on Rendering), pages 409--417, 2006

ACM Trans. Graphic. (Proceedings of ACM SIGGRAPH 2006), 25:1003--1012, 2006

ACM Trans. Graphic. (Proceedings of ACM SIGGRAPH 2006), 25:1013--1024, 2006

In ACM SIGGRAPH Sketches and Applications, 2006

J. Opt. Soc. Am. A, 23(6):1382--1390, 2006

Opt. Lett., 31:936--938, 2006

ACM Trans. Graphic. (Proceedings of ACM SIGGRAPH 2005), 24(3):1032--1039, 2005

In ACM SIGGRAPH Sketches and Applications, 2004

In Proceedings of Graphics Hardware, 2003

Winner: Best Paper